What Is a Deepfake?

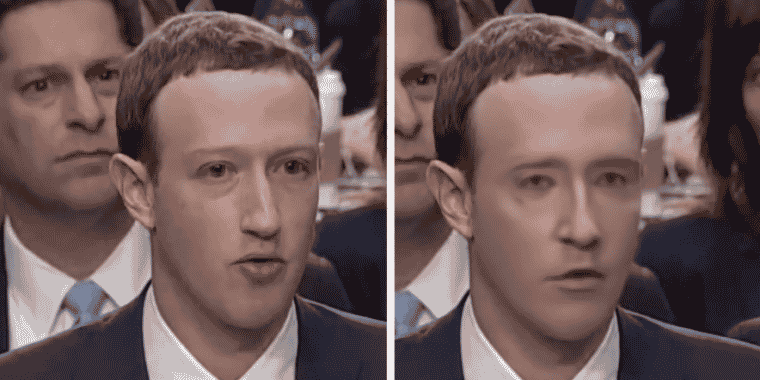

If you’re not already familiar with the term, “deepfakes” are hyper-realistic fabricated videos depicting events that never took place. Although they’re occasionally made for harmless laughs, they’re frequently built to spread political disinformation or to make it look as if someone appeared in pornographic material they never took part in (i.e., non-consensual pornography). Following the 2020 national elections, some people made deepfakes of politicians “saying” shocking things; many have used technologies like Colab to make “revenge porn” featuring former partners.

Digital illusions like these are created with sophisticated editing techniques and machine learning models, which can be trained on Colab’s powerful hardware. Face detection technology is often used, allowing the creator to make it look as though the targeted person is making particular facial expressions or speaking particular words. When it comes to disinformation and pornography, the possibilities are nearly limitless, which can be dangerous.

With that much power on offer, some Colab users may well have abused it. Google may have decided to head off the problem out of ethical concern. Then again, we’re dealing with a major tech company here, so I can’t say for sure. Training deepfake models requires expensive computing resources, so it’s possible that Google imposed the ban purely for economic reasons. Whatever the reason, Google banned deepfake training midway through May.

Password cracking, cryptocurrency mining, and torrenting were also banned by the company, which understandably doesn’t want to be associated with these activities. Anyone who tries to engage in them now sees an error message warning that they may be running disallowed code and that this could restrict their ability to use Colab in the future. Media outlets note that deepfake enthusiasts often point newcomers to Colab so that they can try their hand at producing highly detailed fake media themselves. This suggests that Google’s ban could have far-reaching consequences for the deepfake “community,” whether or not individual creators have malicious intent.