Although the skills above are a must for a Data Scientist, sometimes they end up in a situation where a model built on their local machine has to run in a different environment, or the data they receive is less than perfect. In such cases, a Data Scientist needs some essential CS skills to get the task done and make the work accessible to other engineers.

Important Tools For Data Science

The tools listed below may not fit every situation, but in my opinion they will ease your work as a Data Scientist. For each one, I explain how it can help you become a better Data Scientist who can build a production-ready app rather than just messy analytical notebooks on a local computer.

1. Elasticsearch

A Data Scientist working at a Fortune 50 company will encounter a ton of search use cases, and that is where Elasticsearch comes in. It is a very important framework for dealing with search and NLP use cases. Elastic provides ready-made Python clients, so you do not have to build something from scratch in Python. It offers a scalable and fault-tolerant approach to indexing and searching documents. As the data grows, you can spin up more nodes to keep query execution fast.

Elastic also gives you custom plugins and plenty of bells and whistles, such as analyzers for multiple languages. Because it essentially scores the similarity between a query and the documents in the index, it can also be used for document similarity comparison. I would prefer Elasticsearch to importing TF-IDF from scikit-learn.
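To make the document similarity idea a bit more concrete, here is a minimal sketch that only builds the JSON body of a `more_like_this` query, which is part of the Elasticsearch query DSL. The function name `build_similarity_query` and the field name `body` are my own assumptions for illustration. The resulting dictionary would still have to be sent to an index's `_search` endpoint, for example through the Python client, and that part is not shown here.

```python
import json


def build_similarity_query(text, fields, size=5):
    """Build a request body that asks for documents similar to `text`.

    Hypothetical helper: the name and defaults are illustrative only.
    """
    return {
        "size": size,  # how many similar documents to return
        "query": {
            "more_like_this": {
                "fields": list(fields),  # text fields to compare against
                "like": text,            # free text to find similar docs for
                "min_term_freq": 1,      # keep terms that occur only once
                "min_doc_freq": 1,       # keep terms that are rare in the index
            }
        },
    }


# "body" is an assumed field name in an assumed index of articles.
query = build_similarity_query("tools for production data science", ["body"], size=3)
print(json.dumps(query, indent=2))
```

Lowering `min_term_freq` and `min_doc_freq` to 1 is a choice that suits short texts and small indices. On a large index you would probably keep the defaults.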

2. REST API

After building a model, a Data Scientist needs to train it in a shared environment, because otherwise the model is available only to them. To turn the model into an actual production service, the Data Scientist needs to make it available through a standard API call or some other portable interface that application developers can use.
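As a rough illustration of exposing a model through an API call, here is a minimal sketch that uses only Python's standard library. The `predict` function is a hypothetical stand-in for a trained model (it just averages its inputs), and the `/predict` path, the port, and the JSON shape are assumptions of mine. In practice you would more likely reach for a web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a trained model: returns the mean of the inputs."""
    return sum(features) / len(features)


class PredictHandler(BaseHTTPRequestHandler):
    """Answers POST /predict with a JSON prediction."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            result = {"prediction": predict(payload["features"])}
        except (ValueError, KeyError, TypeError, ZeroDivisionError):
            # Malformed JSON, missing key, wrong types, or an empty list.
            self.send_error(400, "invalid JSON payload")
            return
        body = json.dumps(result).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="127.0.0.1", port=8000):
    """Create the HTTP server without starting it."""
    return HTTPServer((host, port), PredictHandler)


# To serve requests, call: make_server().serve_forever()
```

With the server running, a client would POST a body such as `{"features": [1, 2, 3]}` to `/predict` and get back a JSON object with a `prediction` key.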

3. Linux

A huge part of Data Science is done through programming, and much of that code is developed and run on Linux. Knowing the CLI (Command Line Interface) is therefore an advantage for a Data Scientist. Working with Python, like data science in general, also involves package and framework management, your path, environment variables, and many other things that are handled through the command line.
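To show how the path and environment variables surface inside Python, here is a small sketch using only the standard library. The variable name `MODEL_DIR` and its default value are hypothetical. They stand for any setting you would export in the shell before running a script.

```python
import os
import shutil
import sys


def describe_environment(tool="python3", variable="MODEL_DIR", default="./models"):
    """Collect a few facts that are normally controlled from the command line."""
    # PATH is one string of directories joined by os.pathsep (":" on Linux).
    path_entries = [p for p in os.environ.get("PATH", "").split(os.pathsep) if p]
    return {
        "interpreter": sys.executable,           # the Python binary in use
        "path_entries": path_entries,            # directories searched for commands
        "tool_location": shutil.which(tool),     # where `tool` resolves, or None
        "model_dir": os.environ.get(variable, default),  # env var with a fallback
    }


info = describe_environment()
print(info["interpreter"])
print(info["model_dir"])
```

Running the script after `export MODEL_DIR=/some/dir` in the shell would change the last printed value without touching the code.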

4. Docker and Kubernetes

Docker is an open-source project that facilitates deploying applications as portable, self-sufficient containers that can run in the cloud or anywhere else. It gives you a production-ready application environment without having to painstakingly configure a production server for every service running on it. Docker containers are lighter than virtual machines because they share the host's kernel, whereas a virtual machine typically installs a full operating system.

Since the market is moving toward containerized applications, knowing Docker is essential. Docker helps with both training and deploying a model. Models can be containerized as services that carry the environment they need to run and interact smoothly with the other services of the application.

Kubernetes, also written as K8s, is an open-source container orchestration system that offers automated deployment, management, and scaling of containerized applications across multiple hosts. It was designed by Google but is now maintained by the Cloud Native Computing Foundation. On this platform, you can easily deploy and manage your Docker containers across a horizontally scalable cluster. Since machine learning and data science are becoming integrated with containerized development, these skills are important for a Data Scientist.

5. Apache Airflow

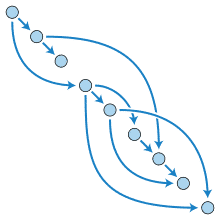

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. It is one of the best workflow management systems, and it keeps your workflow simple and organized by letting you divide it into small, independent task modules.

Airflow also provides a very good set of command-line utilities that can be used to perform complex operations on a DAG (Directed Acyclic Graph). In other words, you can have your Bash or Python scripts run whenever you need them, and Airflow gives you control over scheduled tasks through a good interface.
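The DAG idea itself can be sketched without Airflow. The example below is not Airflow's API. It uses `graphlib` from the Python standard library (Python 3.9+) to show how small task modules with declared dependencies get a valid execution order. The task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after all the tasks it depends on.

    tasks: maps a task name to a zero-argument callable.
    dependencies: maps a task name to the set of tasks that must run first.
    """
    # static_order() raises CycleError if the graph is not acyclic.
    order = list(TopologicalSorter(dependencies).static_order())
    results = [tasks[name]() for name in order]
    return order, results


# Hypothetical task modules; each one just reports what it would do.
tasks = {
    "extract": lambda: "pulled raw data",
    "clean": lambda: "cleaned data",
    "train": lambda: "trained model",
    "report": lambda: "wrote report",
}

# Each task lists its upstream tasks, forming a simple chain.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "train": {"clean"},
    "report": {"train"},
}

order, results = run_pipeline(tasks, dependencies)
print(order)
```

A workflow manager adds scheduling, retries, and monitoring on top of this ordering, which is the part that makes it worth using over a hand-written script.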

Point to remember

Tools change rapidly, especially in Data Science, Machine Learning, and AI, and new and upgraded ones appear all the time. The tools mentioned above are in use today, and there are more to come. The key to shining in these fields is to keep yourself up to date.